How do we justify the need to make our organizations more resilient?

Quick primer: in this context, resilience is shorthand for adapting to unexpected changes in an environment in an attempt to return to stability. It is not robustness, such as an automatic fail-over without human intervention (for example, removing a server from a pool behind a load balancer).

Resilience Engineers often say we’re looking to improve the resilience of organizations because that’s what allows complex systems to function. That sounds great on paper – let’s be more resilient! How much do I budget, can I save some for a rainy day, and most importantly what SaaS should I buy my resilience from?

The catch is that you can’t have resilience without extra capacity. By its nature, resilience is never perfectly efficient. That’s not to say it is inefficient; quite the opposite. It has to explore paths that optimization typically leaves untrodden or overlooked (why else would we have skipped an optimization there?). The feeling of inefficiency, then, comes from not knowing the cost ahead of time: how much investment is needed to reach “just enough resilience” to withstand the next storm.

It’s also unlike shoring up inefficiencies in code, deploy queue speeds, memory footprints, and so on. We have no strong measures of an organization’s resilience, of where it starts and stops, or of how to fully justify it after the fact. This is an excellent time to remind folks that I am not in sales.

I cite John Allspaw (because that sounds much classier than the truth of outright theft):

> Resilience is the story of the incident that didn’t happen.

In other words, it’s hard, if not impossible, to measure the savings you’ll gain from that work. Nobody can answer “Tell me when you’ll have an incident in the future and how much downtime you’ll save.” That’s why we tend toward reactivity: feeling the pain and responding to it, notably with action items as the cure-all. We don’t know when we’ll need particular expertise, or in what “quantity” (if such a thing can even be measured), but we know we’ll need it!

With this in mind, it’s challenging to make the case that resilience is absolutely essential. What’s your ROI for resilience, your KPIs for understanding your system, the OKRs for a project on leveling up your team? Often your best attempt is to focus on robustness, which can feel more “solid” (“I’m going to expand the pool of servers before the influx of traffic this holiday weekend”), and hope to gain some expertise along the way.

Oddly enough, despite our bureaucratic efforts, resilience still happens. “Great!” the unknowing manager says. “We’re getting it for free and we didn’t have to budget anything for it!” Well, no. When your next incident strikes (which is unavoidable in even the best of systems), it will come with a cost: customer trust, lost revenue, public communications, and of course engineering time pulled away from the planned roadmap.

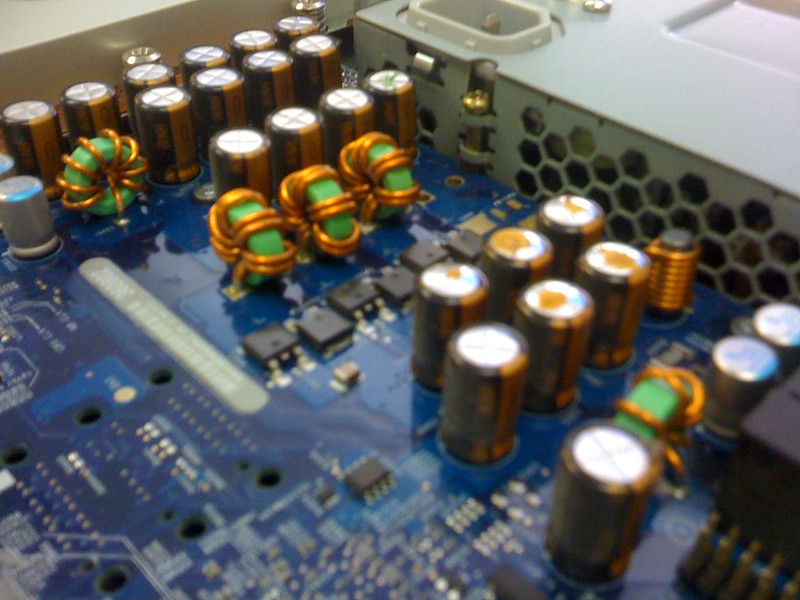

We already build capacity for our systems to withstand the unexpected when it comes to robustness (the technical side of the socio-technical): data redundancy, fail-over mechanisms, and so on. We justify it with similar hand-waving and prognostication of doom and gloom, but it gets approved more often because it’s easier to say “We’ll get 2x traffic, so we need 3x spare capacity,” and running the numbers on that feels tactile and concrete.

If we want to keep an emphasis on resilience, building up capacity not just for what our servers and networks can handle but for what people can handle, then we need to get better at highlighting where that resilience is found. Start by finding the sources of resilience in your organization; that is what makes the need visible. I have ideas on that, which I’ll cryptically leave for another post or conference talk.

Resilience exists, and it is ephemeral. It is amorphous but not limitless. And most importantly of all, it is absolutely essential and very likely one of the places we invest in least.

2 comments for “Justifying Resilience Work”